BigQuery Connector Example¶

The WSO2 BigQuery connector is mostly comprised of operations that are useful for retrieving BigQuery data such as project details, datasets, tables, and jobs (it has one operation that can be used to insert data into BigQuery tables).

In this example we are trying to build up a sample scenario based on the BigQuery Table operations.

What you'll build¶

Given below is a sample scenario that demonstrates how to work with the WSO2 BigQuery Connector:

- The user sends the request to invoke an API to get created table details from the BigQuery. This REST call will retrieve schema level information and send it back to the API caller.

- Insert data in to the created table.

- Retrieve inserted details from the BigQuery table.

- Run an SQL query (BigQuery) and retrieve details from BigQuery table.

All four operations are exposed via an bigquery-testAPI API. The API with the context /resources has four resources.

/gettabledetails: This is used to get get created table details from the BigQuery table by ID./insertdetails: This is used to inserts the data into the table./getdetails: This is used to retrieves table data from a specified set of rows./runQuery: The runQuery operation runs an SQL query (BigQuery) and returns results if the query completes within a specified timeout.

Note: Before starting this scenario, you need to create a project in BigQuery. Next, create a Dataset and under that Dataset you have to have Table. For more information about these operations, please refer to the Setting up the BigQuery Environment.

The following diagram shows the overall solution. User can invoke the table schema level details from the gettabledetails resource. Using the response details, the API caller can insert data into the created table. If users need to retrieve table data from a specified set of rows, they need to invoke the getdetails resource. Finally /runQuery resource runs an SQL query (BigQuery) and returns results back to the API caller.

If you do not want to configure this yourself, you can simply get the project and run it.

Configure the connector in WSO2 Integration Studio¶

Connectors can be added to integration flows in WSO2 Integration Studio. Once added, the operations of the connector can be dragged onto your canvas and added to your resources.

Import the connector¶

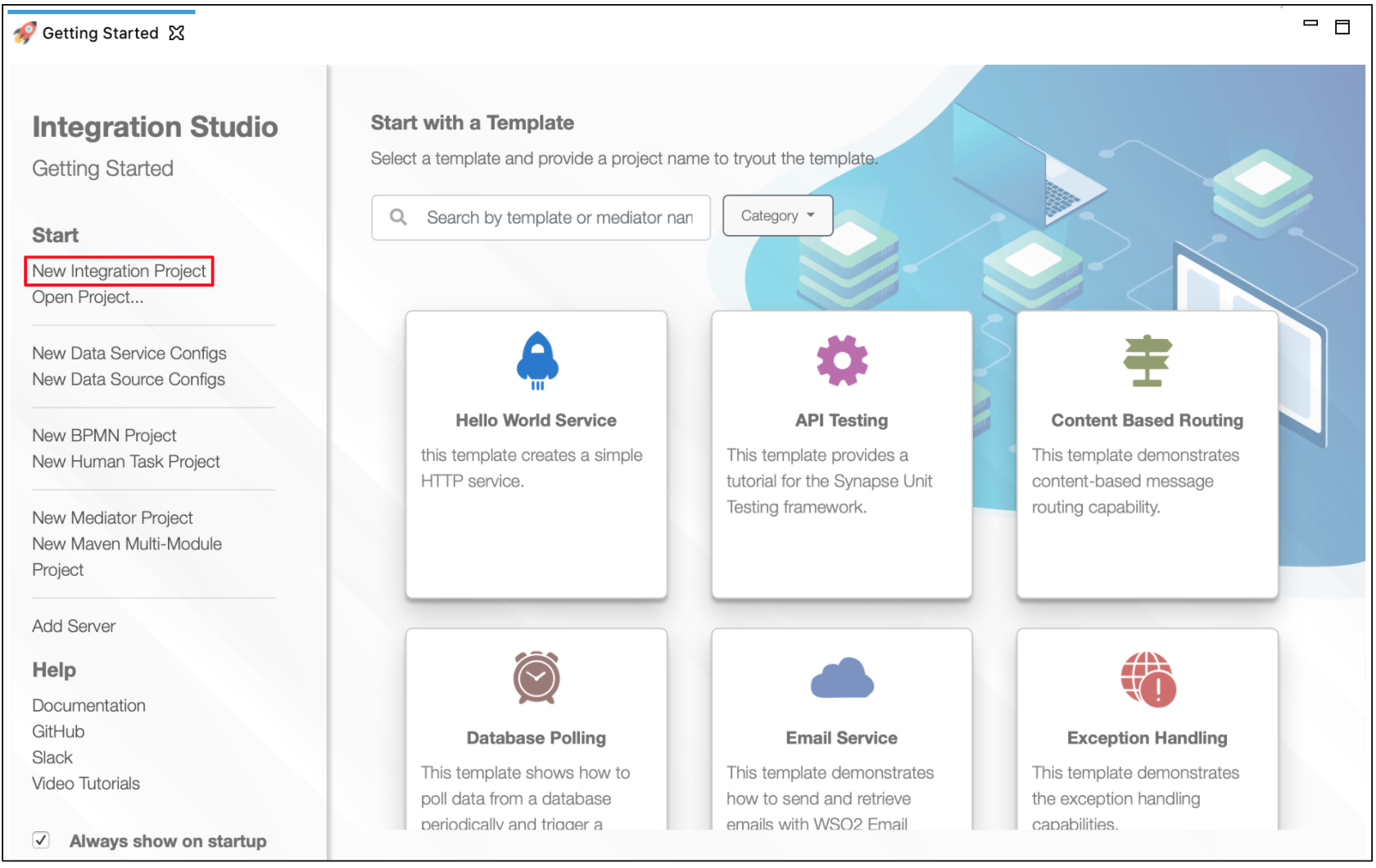

Follow these steps to set up the Integration Project and the Connector Exporter Project.

-

Open WSO2 Integration Studio and select New Integration Project to create an Integration Project.

-

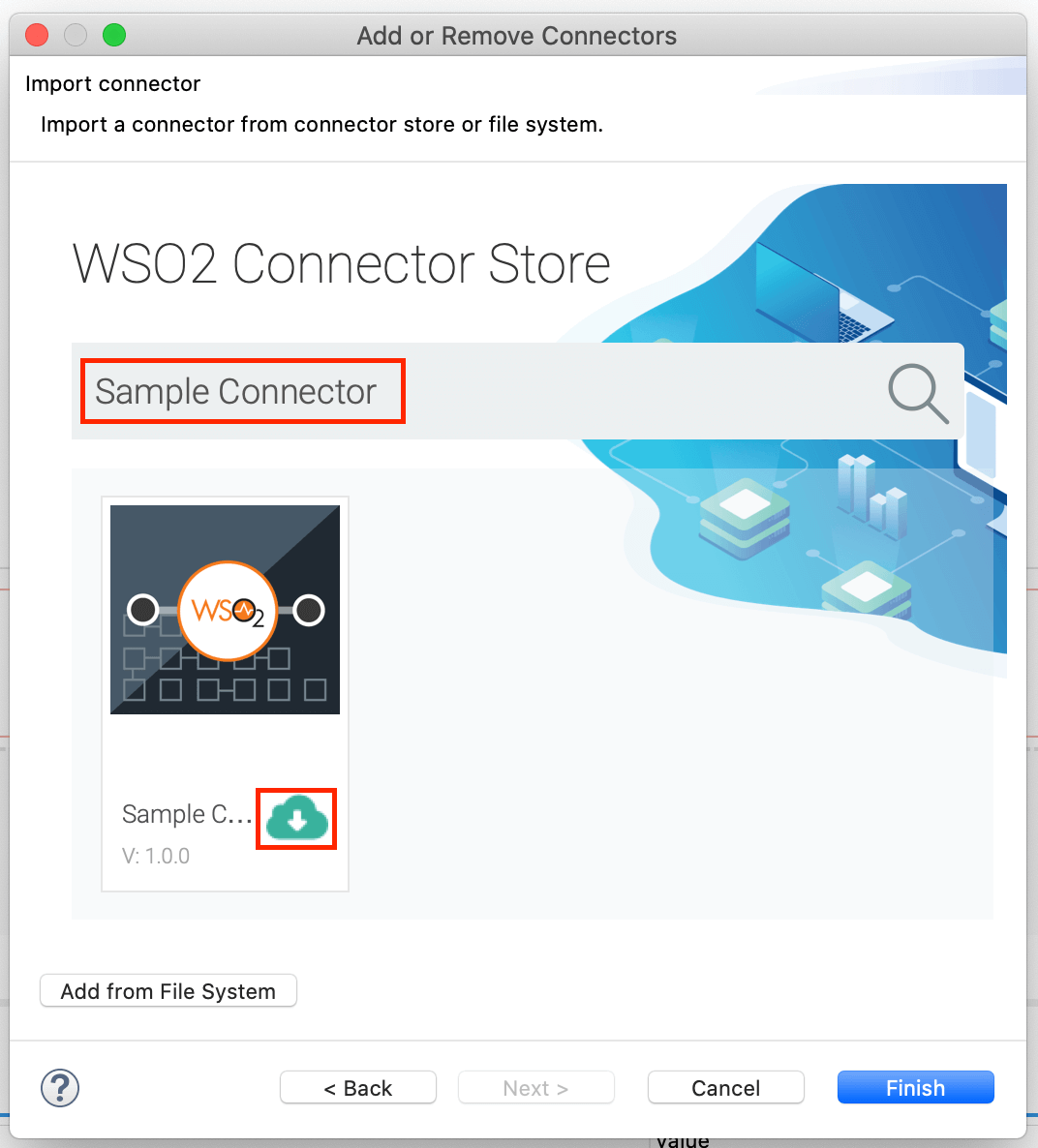

Right-click the project that you created and click on Add or Remove Connector -> Add Connector. You will get directed to the WSO2 Connector Store.

-

Search for the specific connector required for your integration scenario and download it to the workspace.

-

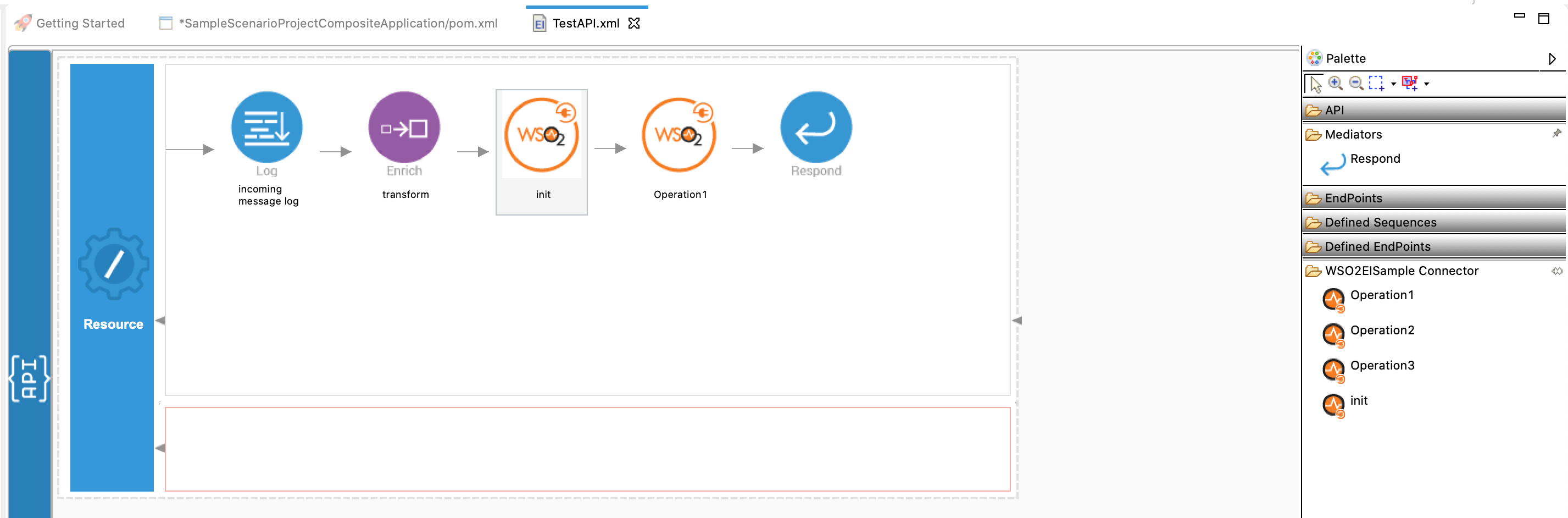

Click Finish, and your Integration Project is ready. The downloaded connector is displayed on the side palette with its operations.

-

You can drag and drop the operations to the design canvas and build your integration logic.

-

Right click on the created Integration Project and select New -> Rest API to create the REST API.

Add integration logic¶

First create an API, which will be where we configure the integration logic. Right click on the created Integration Project and select, New -> Rest API to create the REST API. Specify the API name as bigquery-testAPI and API context as /resources.

Configuring the API¶

Configure a resource for the gettabledetails operation¶

Create a resource that to invoke an API to get created table details from the BigQuery. To achieve this, add the following components to the configuration.

-

Initialize the connector.

-

Navigate into the Palette pane and select the graphical operations icons listed under BigQuery Connector section. Then drag and drop the

initoperation into the Design pane.

-

Add the property values into the

initoperation as shown below. Replace theapiUrl,accessToken,clientId,clientSecret,refreshToken,apiKey,callback, andprettyPrintwith your values.- apiUrl: The base endpoint URL of the BigQuery API.

- accessToken: The OAuth token for the BigQuery API.

- clientId : The client ID for the BigQuery API.

- clientSecret : The client Secret for the BigQuery API.

- refreshToken : The refresh token for the BigQuery API.

- apiKey : The API key. Required unless you provide an OAuth 2.0 token.

- callback : The name of the JavaScript callback function that handles the response. Used in JavaScript JSON-P requests.

- prettyPrint : Returns the response with indentations and line breaks. If the property is true, the response is returned in a human-readable format.

-

-

Set up the getTable operation. This operation retrieves a table by ID.

-

Navigate into the Palette pane and select the graphical operations icons listed under BigQuery Connector section. Then drag and drop the

getTableoperation into the Design pane.

-

In this operation we are going to get a BigQuery table details.

- datasetId : The dataset ID of the requested table.

- projectId : The project ID of the requested table.

- tableId : The ID of the requested table.

In this example, the above

datasetId,projectIdandtableIdparameter values are populated as an input value for the BigQuerygetTableoperation.

-

-

To get the input values in to the

getTable, we can use the property mediator. Navigate into the Palette pane and select the graphical mediators icons listed under Mediators section. Then drag and drop thePropertymediators onto the Design pane as shown below.

> Note: The properties should be added to the pallet before creating the operation.The parameters available for configuring the Property mediator are as follows:

-

Add the property mediator to capture the

tableIdvalue. The 'tableId' contains the ID of the requested table.- name : tableId

- value expression : json-eval($.tableId)

-

Add the property mediator to capture the

datasetIdvalues. The 'volume' contains stock quote volume of the selected company.- name : datasetId

- value expression : json-eval($.datasetId)

-

Add the property mediator to capture the

projectIdvalues. The 'volume' contains stock quote volume of the selected company.- name : projectId

- value expression : json-eval($.projectId)

-

-

Forward the backend response to the API caller.

When you are invoking the created resource, the request of the message is going through the

/gettabledetailsresource. Finally, it is passed to the Respond mediator. The Respond Mediator stops the processing on the current message and sends the message back to the client as a response.-

Drag and drop respond mediator to the Design view.

-

Once you have setup the resource, you can see the

gettabledetailsresource as shown below.

-

Configure a resource for the insertdetails operation¶

-

Initialize the connector. You can use the same configuration to initialize the connector. Please follow the steps given in section 1 for setting up the

initoperation to thegettabledetailsoperation. -

Set up the insertAllTableData operation. Navigate into the Palette pane and select the graphical operations icons listed under BigQuery Connector section. Then drag and drop the

insertAllTableDataoperation into the Design pane. TheinsertAllTableDataoperation inserts the data into the table.- datasetId : The dataset ID of the requested table.

- projectId : The project ID of the requested table.

- tableId : The ID of the requested table.

- skipInvalidRows : A boolean value to check whether the row should be validated.

- ignoreUnknownValues : A boolean value to validate whether the values match the table schema.

- jsonPay : A JSON object that contains a row of data.

-

To get the input values in to the

getTable, we can use the property mediator. Navigate into the Palette pane and select the graphical mediators icons listed under Mediators section. Then drag and drop thePropertymediators onto the Design pane as shown below.

The parameters available for configuring the Property mediator are as follows:

-

Add the property mediator to capture the

datasetId,projectId,tableIdvalues. Please follow the steps given ingettabledetailsoperation section 3. -

Add the property mediator to capture the

datasetIdvalues. The 'volume' contains stock quote volume of the selected company.- name : jsonPay

- value expression : json-eval($.jsonPay)

In this example,

skipInvalidRowsvalue is configured as true andignoreUnknownValuesvalue is configured as true. -

Forward the backend response to the API caller. Please follow the steps given in section 4 in the

gettabledetailsoperation.

Configure a resource for the listTabledata operation¶

-

Initialize the connector. You can use the same configuration to initialize the connector. Please follow the steps given in section 1 for setting up the

initoperation to thegettabledetailsoperation. -

Set up the listTabledata operation. Navigate into the Palette pane and select the graphical operations icons listed under BigQuery Connector section. Then drag and drop the

listTabledataoperation into the Design pane. ThelistTabledataoperation retrieves table data from a specified set of rows.- datasetId : The dataset ID of the requested table.

- projectId : The project ID of the requested table.

- tableId : The ID of the requested table.

-

To get the input values in to the

listTabledata, we can use the property mediator. Navigate into the Palette pane and select the graphical mediators icons listed under Mediators section. Then drag and drop thePropertymediators onto the Design pane as shown below.

The parameters available for configuring the Property mediator are as follows:

-

Add the property mediator to capture the

datasetId,projectId,tableIdvalues. Please follow the steps given ingettabledetailsoperation section 3. -

Forward the backend response to the API caller. Please follow the steps given in section 4 in the

gettabledetailsoperation.

Configure a resource for the /runQuery operation¶

-

Initialize the connector. You can use the same configuration to initialize the connector. Please follow the steps given in section 1 for setting up the

initoperation to thegettabledetailsoperation. -

Set up the /runQuery operation. Navigate into the Palette pane and select the graphical operations icons listed under BigQuery Connector section. Then drag and drop the

/runQueryoperation into the Design pane. The/runQueryoperation runs an SQL query (BigQuery) and returns results if the query completes within a specified timeout.- projectId : The project ID of the requested table.

- kind : The resource type of the request.

- defaultProjectId : The ID of the project that contains this dataset.

- defaultDatasetId : A unique ID (required) for this dataset without the project name. The ID must contain only letters (a-z, A-Z), numbers (0-9), or underscores (_). The maximum length is 1,024 characters.

- query : A query string (required) that complies with the BigQuery query syntax.

- maxResults : The maximum number of rows of data (results) to return per page. Responses are also limited to 10 MB. By default, there is no maximum row count and only the byte limit applies.

- timeoutMs : Specifies how long (in milliseconds) the system should wait for the query to complete before expiring and returning the request.

- dryRun : If set to true, BigQuery does not run the job. Instead, if the query is valid, BigQuery returns statistics about the job. If the query is invalid, an error returns. The default value is false.

- useQueryCache : Specifies whether to look for the result in the query cache. The default value is true.

-

To get the input values in to the

runQuery, we can use the property mediator. Navigate into the Palette pane and select the graphical mediators icons listed under Mediators section. Then drag and drop thePropertymediators onto the Design pane as shown below.

The parameters available for configuring the Property mediator are as follows:

-

Add the property mediator to capture the

projectId,defaultDatasetIdvalue. Please follow the steps given ingettabledetailsoperation section 3. In this example,kindvalue is configured as bigquery#tableDataInsertAllResponse,queryvalue is configured as SELECT * FROM students,maxResultsvalue is configured as 1000,timeoutMsvalue is configured as 1000,dryRunvalue is configured as false anduseQueryCachevalue is configured as true. -

Forward the backend response to the API caller. Please follow the steps given in section 4 in the

gettabledetailsoperation.

Now you can switch into the Source view and check the XML configuration files of the created API and sequences.

bigquery-testAPI.xml

<?xml version="1.0" encoding="UTF-8"?>

<api context="/resources" name="bigquery-testAPI" xmlns="http://ws.apache.org/ns/synapse">

<resource methods="POST" url-mapping="/gettabledetails">

<inSequence>

<property expression="json-eval($.tableId)" name="tableId" scope="default" type="STRING"/>

<property expression="json-eval($.datasetId)" name="datasetId" scope="default" type="STRING"/>

<property expression="json-eval($.projectId)" name="projectId" scope="default" type="STRING"/>

<bigquery.init>

<apiUrl>https://www.googleapis.com</apiUrl>

<accessToken>ya29.a0AfH6SMA6j0L_cGNi0BpxXLGaYlUQUbkHpGY31iFpjz4VOlbx3PlP5XBWW9E5bvdqW7cu8kjxMqJ7WShYGxOooXNc20cnNkHOkfesaun6NnhA3omK8ERWKSfICJGucG1tp3P0mVWNtQ6M2ZdDgigQ-3gmB0Xtphj3Ovw</accessToken>

<clientId>392276369305-pg6a4bq41r79gsv3mdmd8vesscf477sf.apps.googleusercontent.com</clientId>

<clientSecret>UgtzggStea3Xfd9q7TUMeyNo</clientSecret>

<refreshToken>1//0gCwbRibyQinFCgYIARAAGBASNwF-L9IrO9590FKKiOro0UUEZEHD4DiG9or41nbIEmWOzsaM22btR4QLKXHfGMDDUWK2hrp5EBo</refreshToken>

<registryPath>{$ctx:registryPath}</registryPath>

<apiKey>XXXX</apiKey>

<callback>callBackFunction</callback>

<prettyPrint>true</prettyPrint>

<quotaUser>{$ctx:quotaUser}</quotaUser>

<userIp>{$ctx:userIp}</userIp>

<fields>{$ctx:fields}</fields>

<ifNoneMatch>{$ctx:ifNoneMatch}</ifNoneMatch>

<ifMatch>{$ctx:ifMatch}</ifMatch>

</bigquery.init>

<bigquery.getTable>

<datasetId>{$ctx:datasetId}</datasetId>

<projectId>{$ctx:projectId}</projectId>

<tableId>{$ctx:tableId}</tableId>

</bigquery.getTable>

<respond/>

</inSequence>

<outSequence/>

<faultSequence/>

</resource>

<resource methods="POST" url-mapping="/insertdetails">

<inSequence>

<property expression="json-eval($.tableId)" name="tableId" scope="default" type="STRING"/>

<property expression="json-eval($.datasetId)" name="datasetId" scope="default" type="STRING"/>

<property expression="json-eval($.projectId)" name="projectId" scope="default" type="STRING"/>

<property expression="json-eval($.jsonPay)" name="jsonPay" scope="default" type="STRING"/>

<bigquery.init>

<apiUrl>https://www.googleapis.com</apiUrl>

<accessToken>ya29.a0AfH6SMA6j0L_cGNi0BpxXLGaYlUQUbkHpGY31iFpjz4VOlbx3PlP5XBWW9E5bvdqW7cu8kjxMqJ7WShYGxOooXNc20cnNkHOkfesaun6NnhA3omK8ERWKSfICJGucG1tp3P0mVWNtQ6M2ZdDgigQ-3gmB0Xtphj3Ovw</accessToken>

<clientId>392276369305-pg6a4bq41r79gsv3mdmd8vesscf477sf.apps.googleusercontent.com</clientId>

<clientSecret>UgtzggStea3Xfd9q7TUMeyNo</clientSecret>

<refreshToken>1//0gCwbRibyQinFCgYIARAAGBASNwF-L9IrO9590FKKiOro0UUEZEHD4DiG9or41nbIEmWOzsaM22btR4QLKXHfGMDDUWK2hrp5EBo</refreshToken>

<registryPath>{$ctx:registryPath}</registryPath>

<apiKey>XXXX</apiKey>

<callback>callBackFunction</callback>

<prettyPrint>true</prettyPrint>

<quotaUser>{$ctx:quotaUser}</quotaUser>

<userIp>{$ctx:userIp}</userIp>

<fields>{$ctx:fields}</fields>

<ifNoneMatch>{$ctx:ifNoneMatch}</ifNoneMatch>

<ifMatch>{$ctx:ifMatch}</ifMatch>

</bigquery.init>

<bigquery.insertAllTableData>

<datasetId>{$ctx:datasetId}</datasetId>

<projectId>{$ctx:projectId}</projectId>

<tableId>{$ctx:tableId}</tableId>

<skipInvalidRows>true</skipInvalidRows>

<ignoreUnknownValues>true</ignoreUnknownValues>

<templateSuffix>{$ctx:templateSuffix}</templateSuffix>

<jsonPay>{$ctx:jsonPay}</jsonPay>

</bigquery.insertAllTableData>

<respond/>

</inSequence>

<outSequence/>

<faultSequence/>

</resource>

<resource methods="POST" url-mapping="/getdetails">

<inSequence>

<property expression="json-eval($.tableId)" name="tableId" scope="default" type="STRING"/>

<property expression="json-eval($.datasetId)" name="datasetId" scope="default" type="STRING"/>

<property expression="json-eval($.projectId)" name="projectId" scope="default" type="STRING"/>

<bigquery.init>

<apiUrl>https://www.googleapis.com</apiUrl>

<accessToken>ya29.a0AfH6SMA6j0L_cGNi0BpxXLGaYlUQUbkHpGY31iFpjz4VOlbx3PlP5XBWW9E5bvdqW7cu8kjxMqJ7WShYGxOooXNc20cnNkHOkfesaun6NnhA3omK8ERWKSfICJGucG1tp3P0mVWNtQ6M2ZdDgigQ-3gmB0Xtphj3Ovw</accessToken>

<clientId>392276369305-pg6a4bq41r79gsv3mdmd8vesscf477sf.apps.googleusercontent.com</clientId>

<clientSecret>UgtzggStea3Xfd9q7TUMeyNo</clientSecret>

<refreshToken>1//0gCwbRibyQinFCgYIARAAGBASNwF-L9IrO9590FKKiOro0UUEZEHD4DiG9or41nbIEmWOzsaM22btR4QLKXHfGMDDUWK2hrp5EBo</refreshToken>

<registryPath>{$ctx:registryPath}</registryPath>

<apiKey>XXXX</apiKey>

<callback>callBackFunction</callback>

<prettyPrint>true</prettyPrint>

<quotaUser>{$ctx:quotaUser}</quotaUser>

<userIp>{$ctx:userIp}</userIp>

<fields>{$ctx:fields}</fields>

<ifNoneMatch>{$ctx:ifNoneMatch}</ifNoneMatch>

<ifMatch>{$ctx:ifMatch}</ifMatch>

</bigquery.init>

<bigquery.listTabledata>

<datasetId>{$ctx:datasetId}</datasetId>

<projectId>{$ctx:projectId}</projectId>

<tableId>{$ctx:tableId}</tableId>

<maxResults>{$ctx:maxResults}</maxResults>

<pageToken>{$ctx:pageToken}</pageToken>

<startIndex>{$ctx:startIndex}</startIndex>

</bigquery.listTabledata>

<respond/>

</inSequence>

<outSequence/>

<faultSequence/>

</resource>

<resource methods="POST" url-mapping="/runQuery">

<inSequence>

<property expression="json-eval($.tableId)" name="tableId" scope="default" type="STRING"/>

<property expression="json-eval($.defaultDatasetId)" name="defaultDatasetId" scope="default" type="STRING"/>

<property expression="json-eval($.projectId)" name="projectId" scope="default" type="STRING"/>

<bigquery.init>

<apiUrl>https://www.googleapis.com</apiUrl>

<accessToken>ya29.a0AfH6SMA6j0L_cGNi0BpxXLGaYlUQUbkHpGY31iFpjz4VOlbx3PlP5XBWW9E5bvdqW7cu8kjxMqJ7WShYGxOooXNc20cnNkHOkfesaun6NnhA3omK8ERWKSfICJGucG1tp3P0mVWNtQ6M2ZdDgigQ-3gmB0Xtphj3Ovw</accessToken>

<clientId>392276369305-pg6a4bq41r79gsv3mdmd8vesscf477sf.apps.googleusercontent.com</clientId>

<clientSecret>UgtzggStea3Xfd9q7TUMeyNo</clientSecret>

<refreshToken>1//0gCwbRibyQinFCgYIARAAGBASNwF-L9IrO9590FKKiOro0UUEZEHD4DiG9or41nbIEmWOzsaM22btR4QLKXHfGMDDUWK2hrp5EBo</refreshToken>

<registryPath>{$ctx:registryPath}</registryPath>

<apiKey>XXXX</apiKey>

<callback>callBackFunction</callback>

<prettyPrint>true</prettyPrint>

<quotaUser>{$ctx:quotaUser}</quotaUser>

<userIp>{$ctx:userIp}</userIp>

<fields>{$ctx:fields}</fields>

<ifNoneMatch>{$ctx:ifNoneMatch}</ifNoneMatch>

<ifMatch>{$ctx:ifMatch}</ifMatch>

</bigquery.init>

<bigquery.runQuery>

<projectId>{$ctx:projectId}</projectId>

<kind>bigquery#tableDataInsertAllResponse</kind>

<query>SELECT * FROM students</query>

<maxResults>10000</maxResults>

<timeoutMs>10000</timeoutMs>

<dryRun>false</dryRun>

<useQueryCache>true</useQueryCache>

<defaultDatasetId>{$ctx:defaultDatasetId}</defaultDatasetId>

<defaultProjectId>{$ctx:defaultProjectId}</defaultProjectId>

<useLegacySql>{$ctx:useLegacySql}</useLegacySql>

</bigquery.runQuery>

<respond/>

</inSequence>

<outSequence/>

<faultSequence/>

</resource>

</api> <?xml version="1.0" encoding="UTF-8"?>

<api context="/resources" name="bigquery-testAPI" xmlns="http://ws.apache.org/ns/synapse">

<resource methods="POST" url-mapping="/gettabledetails">

<inSequence>

<property expression="json-eval($.tableId)" name="tableId" scope="default" type="STRING"/>

<property expression="json-eval($.datasetId)" name="datasetId" scope="default" type="STRING"/>

<property expression="json-eval($.projectId)" name="projectId" scope="default" type="STRING"/>

<bigquery.init>

<apiUrl>https://www.googleapis.com</apiUrl>

<accessToken>ya29.a0AfH6SMA6j0L_cGNi0BpxXLGaYlUQUbkHpGY31iFpjz4VOlbx3PlP5XBWW9E5bvdqW7cu8kjxMqJ7WShYGxOooXNc20cnNkHOkfesaun6NnhA3omK8ERWKSfICJGucG1tp3P0mVWNtQ6M2ZdDgigQ-3gmB0Xtphj3Ovw</accessToken>

<clientId>392276369305-pg6a4bq41r79gsv3mdmd8vesscf477sf.apps.googleusercontent.com</clientId>

<clientSecret>UgtzggStea3Xfd9q7TUMeyNo</clientSecret>

<refreshToken>1//0gCwbRibyQinFCgYIARAAGBASNwF-L9IrO9590FKKiOro0UUEZEHD4DiG9or41nbIEmWOzsaM22btR4QLKXHfGMDDUWK2hrp5EBo</refreshToken>

<registryPath>{$ctx:registryPath}</registryPath>

<apiKey>XXXX</apiKey>

<callback>callBackFunction</callback>

<prettyPrint>true</prettyPrint>

<quotaUser>{$ctx:quotaUser}</quotaUser>

<userIp>{$ctx:userIp}</userIp>

<fields>{$ctx:fields}</fields>

<ifNoneMatch>{$ctx:ifNoneMatch}</ifNoneMatch>

<ifMatch>{$ctx:ifMatch}</ifMatch>

</bigquery.init>

<bigquery.getTable>

<datasetId>{$ctx:datasetId}</datasetId>

<projectId>{$ctx:projectId}</projectId>

<tableId>{$ctx:tableId}</tableId>

</bigquery.getTable>

<respond/>

</inSequence>

<outSequence/>

<faultSequence/>

</resource>

<resource methods="POST" url-mapping="/insertdetails">

<inSequence>

<property expression="json-eval($.tableId)" name="tableId" scope="default" type="STRING"/>

<property expression="json-eval($.datasetId)" name="datasetId" scope="default" type="STRING"/>

<property expression="json-eval($.projectId)" name="projectId" scope="default" type="STRING"/>

<property expression="json-eval($.jsonPay)" name="jsonPay" scope="default" type="STRING"/>

<bigquery.init>

<apiUrl>https://www.googleapis.com</apiUrl>

<accessToken>ya29.a0AfH6SMA6j0L_cGNi0BpxXLGaYlUQUbkHpGY31iFpjz4VOlbx3PlP5XBWW9E5bvdqW7cu8kjxMqJ7WShYGxOooXNc20cnNkHOkfesaun6NnhA3omK8ERWKSfICJGucG1tp3P0mVWNtQ6M2ZdDgigQ-3gmB0Xtphj3Ovw</accessToken>

<clientId>392276369305-pg6a4bq41r79gsv3mdmd8vesscf477sf.apps.googleusercontent.com</clientId>

<clientSecret>UgtzggStea3Xfd9q7TUMeyNo</clientSecret>

<refreshToken>1//0gCwbRibyQinFCgYIARAAGBASNwF-L9IrO9590FKKiOro0UUEZEHD4DiG9or41nbIEmWOzsaM22btR4QLKXHfGMDDUWK2hrp5EBo</refreshToken>

<registryPath>{$ctx:registryPath}</registryPath>

<apiKey>XXXX</apiKey>

<callback>callBackFunction</callback>

<prettyPrint>true</prettyPrint>

<quotaUser>{$ctx:quotaUser}</quotaUser>

<userIp>{$ctx:userIp}</userIp>

<fields>{$ctx:fields}</fields>

<ifNoneMatch>{$ctx:ifNoneMatch}</ifNoneMatch>

<ifMatch>{$ctx:ifMatch}</ifMatch>

</bigquery.init>

<bigquery.insertAllTableData>

<datasetId>{$ctx:datasetId}</datasetId>

<projectId>{$ctx:projectId}</projectId>

<tableId>{$ctx:tableId}</tableId>

<skipInvalidRows>true</skipInvalidRows>

<ignoreUnknownValues>true</ignoreUnknownValues>

<templateSuffix>{$ctx:templateSuffix}</templateSuffix>

<jsonPay>{$ctx:jsonPay}</jsonPay>

</bigquery.insertAllTableData>

<respond/>

</inSequence>

<outSequence/>

<faultSequence/>

</resource>

<resource methods="POST" url-mapping="/getdetails">

<inSequence>

<property expression="json-eval($.tableId)" name="tableId" scope="default" type="STRING"/>

<property expression="json-eval($.datasetId)" name="datasetId" scope="default" type="STRING"/>

<property expression="json-eval($.projectId)" name="projectId" scope="default" type="STRING"/>

<bigquery.init>

<apiUrl>https://www.googleapis.com</apiUrl>

<accessToken>ya29.a0AfH6SMA6j0L_cGNi0BpxXLGaYlUQUbkHpGY31iFpjz4VOlbx3PlP5XBWW9E5bvdqW7cu8kjxMqJ7WShYGxOooXNc20cnNkHOkfesaun6NnhA3omK8ERWKSfICJGucG1tp3P0mVWNtQ6M2ZdDgigQ-3gmB0Xtphj3Ovw</accessToken>

<clientId>392276369305-pg6a4bq41r79gsv3mdmd8vesscf477sf.apps.googleusercontent.com</clientId>

<clientSecret>UgtzggStea3Xfd9q7TUMeyNo</clientSecret>

<refreshToken>1//0gCwbRibyQinFCgYIARAAGBASNwF-L9IrO9590FKKiOro0UUEZEHD4DiG9or41nbIEmWOzsaM22btR4QLKXHfGMDDUWK2hrp5EBo</refreshToken>

<registryPath>{$ctx:registryPath}</registryPath>

<apiKey>XXXX</apiKey>

<callback>callBackFunction</callback>

<prettyPrint>true</prettyPrint>

<quotaUser>{$ctx:quotaUser}</quotaUser>

<userIp>{$ctx:userIp}</userIp>

<fields>{$ctx:fields}</fields>

<ifNoneMatch>{$ctx:ifNoneMatch}</ifNoneMatch>

<ifMatch>{$ctx:ifMatch}</ifMatch>

</bigquery.init>

<bigquery.listTabledata>

<datasetId>{$ctx:datasetId}</datasetId>

<projectId>{$ctx:projectId}</projectId>

<tableId>{$ctx:tableId}</tableId>

<maxResults>{$ctx:maxResults}</maxResults>

<pageToken>{$ctx:pageToken}</pageToken>

<startIndex>{$ctx:startIndex}</startIndex>

</bigquery.listTabledata>

<respond/>

</inSequence>

<outSequence/>

<faultSequence/>

</resource>

<resource methods="POST" url-mapping="/runQuery">

<inSequence>

<property expression="json-eval($.tableId)" name="tableId" scope="default" type="STRING"/>

<property expression="json-eval($.defaultDatasetId)" name="defaultDatasetId" scope="default" type="STRING"/>

<property expression="json-eval($.projectId)" name="projectId" scope="default" type="STRING"/>

<bigquery.init>

<apiUrl>https://www.googleapis.com</apiUrl>

<accessToken>ya29.a0AfH6SMA6j0L_cGNi0BpxXLGaYlUQUbkHpGY31iFpjz4VOlbx3PlP5XBWW9E5bvdqW7cu8kjxMqJ7WShYGxOooXNc20cnNkHOkfesaun6NnhA3omK8ERWKSfICJGucG1tp3P0mVWNtQ6M2ZdDgigQ-3gmB0Xtphj3Ovw</accessToken>

<clientId>392276369305-pg6a4bq41r79gsv3mdmd8vesscf477sf.apps.googleusercontent.com</clientId>

<clientSecret>UgtzggStea3Xfd9q7TUMeyNo</clientSecret>

<refreshToken>1//0gCwbRibyQinFCgYIARAAGBASNwF-L9IrO9590FKKiOro0UUEZEHD4DiG9or41nbIEmWOzsaM22btR4QLKXHfGMDDUWK2hrp5EBo</refreshToken>

<registryPath>{$ctx:registryPath}</registryPath>

<apiKey>XXXX</apiKey>

<callback>callBackFunction</callback>

<prettyPrint>true</prettyPrint>

<quotaUser>{$ctx:quotaUser}</quotaUser>

<userIp>{$ctx:userIp}</userIp>

<fields>{$ctx:fields}</fields>

<ifNoneMatch>{$ctx:ifNoneMatch}</ifNoneMatch>

<ifMatch>{$ctx:ifMatch}</ifMatch>

</bigquery.init>

<bigquery.runQuery>

<projectId>{$ctx:projectId}</projectId>

<kind>bigquery#tableDataInsertAllResponse</kind>

<query>SELECT * FROM students</query>

<maxResults>10000</maxResults>

<timeoutMs>10000</timeoutMs>

<dryRun>false</dryRun>

<useQueryCache>true</useQueryCache>

<defaultDatasetId>{$ctx:defaultDatasetId}</defaultDatasetId>

<defaultProjectId>{$ctx:defaultProjectId}</defaultProjectId>

<useLegacySql>{$ctx:useLegacySql}</useLegacySql>

</bigquery.runQuery>

<respond/>

</inSequence>

<outSequence/>

<faultSequence/>

</resource>

</api>

Get the project¶

You can download the ZIP file and extract the contents to get the project code.

Deployment¶

Follow these steps to deploy the exported CApp in the integration runtime.

Deploying on Micro Integrator

You can copy the composite application to the <PRODUCT-HOME>/repository/deployment/server/carbonapps folder and start the server. Micro Integrator will be started and the composite application will be deployed.

You can further refer the application deployed through the CLI tool. See the instructions on managing integrations from the CLI.

Click here for instructions on deploying on WSO2 Enterprise Integrator 6

-

You can copy the composite application to the

<PRODUCT-HOME>/repository/deployment/server/carbonappsfolder and start the server. -

WSO2 EI server starts and you can login to the Management Console https://localhost:9443/carbon/ URL. Provide login credentials. The default credentials will be admin/admin.

-

You can see that the API is deployed under the API section.

Testing¶

Invoke the API as shown below using the curl command. Curl Application can be downloaded from here.

- The user sends the request to invoke an API to get created table details from the BigQuery.

Sample request

Save a file called data.json with the following payload.

{

"tableId":"students",

"datasetId":"Sample1",

"projectId":"ei-connector-improvement"

}

curl -v POST -d @data.json "http://localhost:8290/resources/getTable" -H "Content-Type:application/json"

// API callback

callBackFunction({

"kind": "bigquery#table",

"etag": "G5Yv0gFoLTD2gSToi5YPwA==",

"id": "ei-connector-improvement:Sample1.students",

"selfLink": "https://www.googleapis.com/bigquery/v2/projects/ei-connector-improvement/datasets/Sample1/tables/students",

"tableReference": {

"projectId": "ei-connector-improvement",

"datasetId": "Sample1",

"tableId": "students"

},

"schema": {

"fields": [

{

"name": "name",

"type": "STRING",

"mode": "NULLABLE"

},

{

"name": "age",

"type": "INTEGER",

"mode": "NULLABLE"

}

]

},

"numBytes": "0",

"numLongTermBytes": "0",

"numRows": "0",

"creationTime": "1592219906721",

"lastModifiedTime": "1592219906768",

"type": "TABLE",

"location": "US"

}

);

Sample request

Save a file called data.json with the following payload.

{

"tableId":"students",

"datasetId":"Sample1",

"projectId":"ei-connector-improvement",

"jsonPay":{

"json":

{

"name":"Jhone",

"age":"30"

}

}

}

curl -v POST -d @data.json "http://localhost:8290/resources/insertAllTableData" -H "Content-Type:application/json"

Expected Response

{

"kind": "bigquery#tableDataInsertAllResponse"

}

Sample request

Save a file called data.json with the following payload.

{

"tableId":"students",

"datasetId":"Sample1",

"projectId":"ei-connector-improvement"

}

curl -v POST -d @data.json "http://localhost:8290/resources/listTabledata" -H "Content-Type:application/json"

Expected Response

```json

// API callback

callBackFunction({

"kind": "bigquery#tableDataList",

"etag": "CddYdG3ttrhpWPEGTOpKKg==",

"totalRows": "0",

"rows": [

{

"f": [

{

"v": "Kasun"

},

{

"v": "25"

}

]

},

{

"f": [

{

"v": "Jhone"

},

{

"v": "30"

}

]

}

]

}

);``` 4. Run an SQL query (BigQuery) and retrieve details from BigQuery table.

Sample request

Save a file called data.json with the following payload.

{

"defaultDatasetId":"Sample1",

"projectId":"ei-connector-improvement"

}

curl -v POST -d @data.json "http://localhost:8290/resources/runQuery" -H "Content-Type:application/json"

{

"kind": "bigquery#queryResponse",

"schema": {

"fields": [

{

"name": "name",

"type": "STRING",

"mode": "NULLABLE"

},

{

"name": "age",

"type": "INTEGER",

"mode": "NULLABLE"

}

]

},

"jobReference": {

"projectId": "ei-connector-improvement",

"jobId": "job_YQS1kmzYpfBT-wKvkLi5uVbSL_Mh",

"location": "US"

},

"totalRows": "2",

"rows": [

{

"f": [

{

"v": "Kasun"

},

{

"v": "25"

}

]

},

{

"f": [

{

"v": "Jhone"

},

{

"v": "30"

}

]

}

],

"totalBytesProcessed": "30",

"jobComplete": true,

"cacheHit": false

}